Statistics and its Types: Statistics is a branch of math focused on collecting, organizing, and understanding numerical data. It involves analyzing and interpreting data to solve real-life problems, using various quantitative models. Some view statistics as a separate scientific discipline rather than just a branch of math. It simplifies complex tasks and offers clear insights into regular activities. Statistics finds applications in diverse fields like weather forecasting, stock market analysis, insurance, betting, and data science.

In this article, we will learn about, What is Statistics, Types of Statistics, Models of Statistics, Statistics Examples, and others in detail.

What are Statistics?

Statistics in Mathematics is the study and manipulation of data. It involves the analysis of numerical data, enabling the extraction of meaningful conclusions from the collected and analyzed data sets.

- According to Merriam-Webster: Statistics is the science of collecting, analyzing, interpreting, and presenting masses of numerical data.

- According to Oxford English Dictionary: Statistics is a branch of mathematics dealing with the collection, analysis, interpretation, presentation, and organization of data.

Statistics

The practice or science of collecting and analysing numerical data in large quantities, especially for the purpose of inferring proportions in a whole from those in a representative sample.

Statistics Terminologies

Some of the most common terms you might come across in statistics are:

- Population: It is actually a collection of a set of individual objects or events whose properties are to be analyzed.

- Sample: It is the subset of a population.

- Variable: It is a characteristic that can have different values.

- Parameter: It is numerical characteristic of population.

Statistics Examples

Some real-life examples of statistics that you might have seen:

Example 1: In a class of 45 students, we calculate their mean marks to evaluate performance of that class.

Example 2: Before elections, you might have seen exit polls. Exit polls are opinion of population sample, that are used to predict election results.

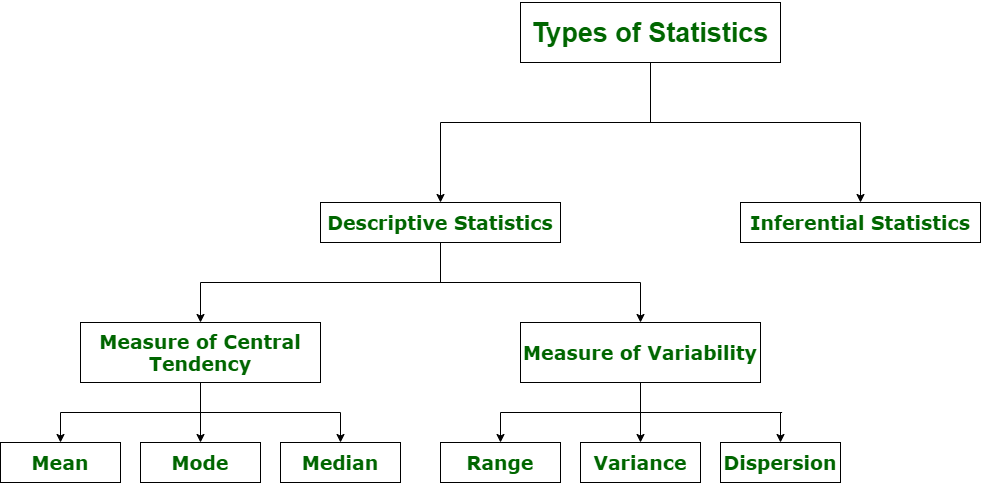

Types of Statistics

There are 2 types of statistics:

- Descriptive Statistics

- Inferential Statistics

Types of statistics is explained in the image added below:

Now let’s learn the same in detail.

Descriptive Statistics

Descriptive statistics uses data that provides a description of the population either through numerical calculated graphs or tables. It provides a graphical summary of data.

It is simply used for summarizing objects, etc. There are two categories in this as follows.

Let’s discuss both categories in detail.

Measure of Central Tendency

Measure of central tendency is also known as summary statistics that are used to represent the center point or a particular value of a data set or sample set. In statistics, there are three common measures of central tendency that are:

Statistics formula

| Statistic |

Formula |

Definition |

Example |

| Mean |

x̄=∑ x/n |

The arithmetic average of a set of values. It’s calculated by adding up all the values in the data set and dividing by the number of values. |

Example: Consider the data set {2, 4, 6, 8, 10}.

(2+4+6+8+10/ 5)

Mean = 5

|

| Median |

Middle value in an ordered data set |

The middle value of a data set when it is arranged in ascending or descending order. If there’s an odd number of observations, it’s the value at the center position. If there’s an even number, it’s the average of the two middle values. |

Example: Data set {3, 6, 9, 12, 15}. Median = 9 |

| Mode |

Value that appears most frequently in a data set |

The value that occurs most frequently in a data set. There can be one mode (unimodal), multiple modes (multimodal), or no mode if all values occur with the same frequency. |

Example: Data set {2, 3, 4, 4, 5, 6, 6, 6, 7}. Mode = 6 |

Mean

It is the measure of the average of all values in a sample set. The mean of the data set is calculated using the formula:

Mean = ∑x/n

For example:

|

Cars

|

Mileage

|

Cylinder

|

|

Swift

|

21.3

|

3

|

|

Verna

|

20.8

|

2

|

|

Santra

|

19

|

5

|

Mean = (Sum of all Terms)/(Total Number of Terms)

⇒ Mean = (21.3 + 20.8 + 19) /3 = 61.1/3

⇒ Mean = 20.366

Median

It is the measure of the central value of a sample set. In these, the data set is ordered from lowest to highest value and then finds the exact middle. The formula used to calculate the median of the data set is, suppose we are given ‘n’ terms in s data set:

If n is Even

- Median = [(n/2)th term + (n/2 + 1)th term]/2

If n is Odd

For example:

|

Cars

|

Mileage

|

Cylinder

|

|

Swift

|

21.3

|

3

|

|

Verna

|

20.8

|

2

|

|

Santra

|

19

|

5

|

| i 20 |

15 |

4 |

Data in Ascending order: 15, 19, 20.8, 21.3

⇒ Median = (20.8 + 19) /2 = 39.8/2

⇒ Median = 19.9

Mode

It is the value most frequently arrived in the sample set. The value repeated most of the time in the central set is actually mode. The mode of the data set is calculated using the formula:

Mode = Term with Highest Frequency

- For example: {2, 3, 4, 2, 4, 6, 4, 7, 7, 4, 2, 4}

- 4 is the most frequent term in this data set.

- Thus, mode is 4.

Measure of Variability

The measure of Variability is also known as the measure of dispersion and is used to describe variability in a sample or population. In statistics, there are three common measures of variability as shown below:

1. Range of Data

It is a given measure of how to spread apart values in a sample set or data set.

Range = Maximum value – Minimum value

2. Variance

In probability theory and statistics, variance measures a data set’s spread or dispersion. It is calculated by averaging the squared deviations from the mean. Variance is usually represented by the symbol σ2.

S2= ∑ni=1 [(xi – ͞x)2 / n]

- n represents total data points

- ͞x represents the mean of data points

- xi represents individual data points

Variance measures variability. The more spread out the data, the greater the variance compared to the average.

There are two types of variance:

- Population variance: Often represented as σ²

- Sample variance: Often represented as s².

Note: The standard deviation is the square root of the variance.

Dispersion

It is the measure of the dispersion of a set of data from its mean.

σ= √ (1/n) ∑ni=1 (xi – μ)2

Inferential Statistics

Inferential Statistics makes inferences and predictions about the population based on a sample of data taken from the population. It generalizes a large dataset and applies probabilities to draw a conclusion.

It is simply used for explaining the meaning of descriptive stats. It is simply used to analyze, interpret results, and draw conclusions. Inferential Statistics is mainly related to and associated with hypothesis testing whose main target is to reject the null hypothesis.

Hypothesis Testing

Hypothesis testing is a type of inferential procedure that takes the help of sample data to evaluate and assess the credibility of a hypothesis about a population.

Inferential statistics are generally used to determine how strong a relationship is within the sample. However, it is very difficult to obtain a population list and draw a random sample. Inferential statistics can be done with the help of various steps as given below:

- Obtain and start with a theory.

- Generate a research hypothesis.

- Operationalize or use variables

- Identify or find out the population to which we can apply study material.

- Generate or form a null hypothesis for these populations.

- Collect and gather a sample of children from the population and simply run a study.

- Then, perform all tests of statistical to clarify if the obtained characteristics of the sample are sufficiently different from what would be expected under the null hypothesis so that we can be able to find and reject the null hypothesis.

Types of Inferential Statistics

Various types of inferential statistics are used widely nowadays and are very easy to interpret. These are given below:

- One sample test of difference/One sample hypothesis test

- Confidence Interval

- Contingency Tables and Chi-Square Statistic

- T-test or Anova

- Pearson Correlation

- Bivariate Regression

- Multi-variate Regression

Data in Statistics

Data is the collection of numbers, words or anything that can be arranged to form a meaningful information. There are various types of the data in the statistics that are added below,

Types of Data

Various types of Data used in statistics are,

- Qualitative Data – Qualitative data is the descriptive data of any object. For example, Kabir is tall, Kaira is thin, etc.

- Quantitative Data – Quantitative data is the numerical data of any object. For example, he ate three chapatis, and we are five friends.

Types of Quantitative Data

We have two types of quantitative data that include,

- Discreate Data: The data that have fixed value is called discreate data, discreate data can easily be counted.

- Continuous Data: The data that has no fixed value and has a range of data is called continuous data. It can be measured.

Representation of Data

We can easily represent the data using various graphs, charts or tables. Various types of representing data set are:

Models of Statistics

Various models of Statistics are used to measure different forms of data. Some of the models of the statistics are added below:

- Skewness in Statistics

- ANOVA Statistics

- Degree of Freedom

- Regression Analysis

- Mean Deviation for Ungrouped Data

- Mean Deviation for Discrete Grouped data

- Exploratory Data Analysis

- Causal Analysis

- Standard Deviation

- Associational Statistical Analysis

Let’s learn about them in detail.

Skewness in Statistics

Skewness in statistics is defined as the measure of the asymmetry in a probability distribution that is used to measure the normal probability distribution of data.

Skewed data can be either positive or negative. If a data curve shifts from left to right is called positive skewed. If the curve moves towards the right to left it is called left skewed.

ANOVA Statistics

ANOVA statistics is another name for the Analysis of Variance in statistics. In the ANOVA model, we use the difference of the mean of the data set from the individual data set to measure the dispersion of the data set.

Analysis of Variance (ANOVA) is a set of statistical tools created by Ronald Fisher to compare means. It helps analyze differences among group averages. ANOVA looks at two kinds of variation: the differences between group averages and the differences within each group. The test tells us if there are disparities among the levels of the independent variable, though it doesn’t pinpoint which differences matter the most.

ANOVA relies on four key assumptions:

- Interval Scale: The dependent data should be measured at an interval scale, meaning the intervals between values are consistent.

- Normal Distribution: The population distribution should ideally be normal, resembling a bell curve.

- Homoscedasticity: This assumption states that the variances of the errors should be consistent across all levels of the independent variable.

- Multicollinearity: There shouldn’t be significant correlation among the independent variables, as this can skew results.

Additionally, ANOVA assumes homogeneity of variance, meaning all groups being compared have similar variance.

ANOVA calculates mean squares to determine the significance of factors (treatments). The treatment mean square is found by dividing the treatment sum of squares by the degrees of freedom.

It operates with a null hypothesis (H0) and an alternative hypothesis (H1). The null hypothesis generally posits that there’s no difference among the means of the samples, while the alternative hypothesis suggests at least one difference exists among the means of the samples.

Degree of Freedom

Degree of Freedom model in statistics measures the changes in the data set if there is a change in the value of the data set. We can move data in this model if we want to estimate any parameter of the data set.

Regression Analysis

Regression Analysis model of the statistics is used to determine the relation between the variables. It gives the relation between the dependent variable and the independent variable.

There are various types of regression analysis techniques:

- Linear regression: This method is used when the relationship between the variables is linear, meaning the change in the dependent variable is proportional to the change in the independent variable.

- Logistic regression: Used to predict categorical dependent variables, such as yes or no, true or false, or 0 or 1. It’s used in classification tasks, such as determining if a transaction is fraudulent or if an email is spam.

- Ridge regression: Ridge regression is a technique used to combat multicollinearity in linear regression models. It adds a penalty term to the regression equation to prevent overfitting. It’s most suitable when a data set contains more predictor variables than observations.

- Lasso regression: Similar to ridge regression, lasso regression also adds a penalty term to the regression equation. However, lasso regression tends to shrink some coefficients to zero, effectively performing variable selection.

- Polynomial regression: Uses polynomial functions to find the relationship between the dependent and independent variables. It can capture nonlinear relationships between variables, which may not be possible with simple linear regression.

- Bayesian linear regression: Bayesian linear regression incorporates Bayesian statistics into the linear regression framework. It allows for the estimation of parameters with uncertainty and the incorporation of prior knowledge into the regression model.

How to create chart and table

Choose the right chart type: Different chart types are better suited for different kinds of data. For example, bar charts are good for comparing categories, while line charts are good for showing trends over time.

Keep it simple: Don’t overload your chart with too much information. Make sure the labels are clear and easy to read.

Use clear and concise titles: Your chart title should accurately reflect the information being presented.

Use color effectively: Color can be a great way to highlight important data points, but avoid using too many colors or colors that clash.

Mean Deviation for Ungrouped Data

Suppose we are given ‘n’ terms in a data set x1, x2, x3, …, xn then the mean deviation about means and median is calculated using the formula,

Mean Deviation for Ungrouped Data = Sum of Deviation/Number of Observation

- Mean of Ungrouped Data = ∑in (x – μ)/n

Mean Deviation for Discrete Grouped data

Let there are x1, x2, x3, …, xn term and their respective frequency are, f1, f2, f3, …, fn then the mean is calculated using the formula,

a) Mean Deviation About Mean

Mean deviation about the mean of the data set is calculated using the formula,

- Mean Deviation (μ) = ∑i = 1n fi (xi – μ)/N

b) Mean Deviation About Median

Mean deviation about the median of the data set is calculated using the formula,

- Mean Deviation (μ) = ∑i = 1n fi (xi – M)/N

Exploratory Data Analysis

Exploratory data analysis (EDA) is a statistical approach for summarizing the main characteristics of data sets. It’s an important first step in any data analysis.

Here are some steps involved in EDA:

- Collect data

- Find and understand all variables

- Clean the dataset

- Identify correlated variables

- Choose the right statistical methods

- Visualize and analyze results

Exploratory Data Analysis (EDA) uses graphs and visual tools to spot overall trends and peculiarities in data. These can be anything from outliers, which are data points that stand out, to unexpected characteristics of the data set.

Following are the four types of EDA:

- Univariate non-graphical

- Multivariate non-graphical

- Univariate graphical

- Multivariate graphical

Causal Analysis

Causal analysis is a process that aims to identify and understand the causes and effects of a problem. It involves:

- Identifying the relevant variables and collecting data.

- Analyzing the data using statistical techniques to determine whether there is a significant relationship between the variables.

- Drawing conclusions about the causal relationship between the variables.

Causal analysis differs from simple correlation in that it investigates the underlying mechanisms and factors that drive changes in variable values, rather than simply finding statistical links. It provides evidence of the causal relationships between variables.

Let’s look at some examples where we might use causal analysis:

- We want to know if adding more fertilizer makes plants grow better.

- Can taking a specific medicine prevent someone from getting sick?

To figure out cause and effect, we often use experiments like giving different groups different treatments and comparing results. These types of studies, called “randomized controlled trials,” are usually the best way to show that something caused a change.

Sometimes, other things can interfere with our understanding of cause and effect. For example, two things might appear connected because they both come from a third factor. This confusion is known as “confounding.” When confounding happens, it can lead us to wrongly think that one thing caused another when it really didn’t.

Standard Deviation

Standard Deviation is a measure of how widely distributed a set of values are from the mean. It compares every data point to the average of all the data points.

A low standard deviation means values are close to the average, while a high standard deviation means values spread out over a wider range. Standard deviation is like the distance between points but applied differently. Using algebra on squares and square roots, rather than absolute values, makes standard deviation convenient in various mathematical applications.

Associational Statistical Analysis

Associational statistical analysis is a method that researchers use to identify correlations between many variables. It can also be used to examine whether researchers can draw conclusions and make predictions about one data set based on the features of another.

Associational analysis examines how two or more features are related while considering other possibly influencing factors.

Some measures of association are:

Chi-square Test for Association

Chi-square test of independence, also called the chi-square test of association, is a statistical method for determining the relationship between two variables that are categorical. It assesses if the variables are unrelated or related. Chi-square test determines the statistical significance of the relationship between variables rather than the intensity of the association. It determines if there is a substantial difference between the observed and expected data. By comparing the two datasets, we can determine whether the variables have a logical association.

For example, a chi-square test can be done to see if there is a statistically significant relationship between gender and the type of product bought. A p-value larger than 0.02 indicates that there is no statistically significant correlation.

Correlation Coefficient

A correlation coefficient is a numerical estimate of the statistical connection that exists between two variables. The variables could be two columns from a data set or two elements of a multivariate random variable.

The Pearson correlation coefficient (r) measures linear correlation, ranging from -1 to 1. It shows the strength and direction of the relationship between two variables. A coefficient of 0 means no linear relationship, while -1 or +1 indicates a perfect linear relationship.

Here are some examples of correlations:

- Positive linear correlation: When the variable on the x-axis rises as the variable on the y-axis increases.

- Negative linear correlation: When the values of the two variables move in opposite directions.

- Nonlinear correlation: Also called curvilinear correlation.

- No correlation: When the two variables are entirely independent.

Data Analysis

Data Analysis is all about making sense of information by using mathematical and logical methods. In simpler terms, it means looking carefully at lots of facts and figures, organizing them, summarizing them, and then checking to see if everything adds up correctly.

Types of Data Analysis

There are basically three types of data analysis which are as follows:

- Descriptive Analysis

- Predictive Analysis

- Prescriptive Analysis

Descriptive Analysis

Examining numerical data and calculations aids in comprehending business operations. Descriptive analysis serves various purposes such as:

- Assessing customer satisfaction

- Monitoring campaigns

- Producing reports

- Evaluating performance

Predictive Analysis

Uses historical data, statistical models, and machine learning algorithms to identify patterns and make predictions about future outcomes. Some applications of predictive analytics are:

- Sales Forecasting: Predictive analytics can help businesses forecast future sales trends based on historical data and market variables.

- Customer Churn Prediction: Businesses can use predictive analytics to identify customers who are likely to churn or stop using their services, allowing proactive measures to retain them.

- Healthcare Diagnostics: Predictive analytics can aid in diagnosing diseases and predicting patient outcomes based on medical history, symptoms, and other relevant data.

Prescriptive Analysis

Uses extensive methods and technologies to analyse data and information in order to determine the best course of action or plan.

For example, prescriptive analysis may suggest specific products to online customers based on their previous behaviour.

Coefficient of Variation

Coefficient of Variation is calculated using the formula,

CV = σ/μ × 100

Where,

- σ is Standard Deviation

- μ is Arithmetic Mean

Applications of Statistics

Various application of statistics in mathematics are added below,

- Statistics is used in mathematical computing.

- Statistics is used in finding probability and chances.

- Statistics is used in weather forcasting, etc.

Business Statistics

Business statistics is the process of collecting, analyzing, interpreting, and presenting data relevant to business operations and decision-making. It is a critical tool for organizations to gain insights into their performance, market dynamics, and customer behavior.

Scope of Statistics

Statistics is a branch of mathematics that deals with the collection, organization, analysis, interpretation, and presentation of data. It is used in a wide variety of fields, including:

- Science: Statistics is used to design experiments, analyze data, and draw conclusions about the natural world.

- Business: Statistics is used to market products, track sales, and make financial decisions.

- Government: Statistics is used to track economic trends, measure the effectiveness of government programs, and allocate resources.

- Healthcare: Statistics is used to develop new drugs, track the spread of diseases, and assess the effectiveness of medical treatments.

- Sports: Statistics is used to analyze player performance, scout new talent, and predict the outcome of games.

Limitations of Statistics

While statistics is a powerful tool, it is important to be aware of its limitations. Some of the most important limitations include:

- Data quality: The quality of statistical analysis is limited by the quality of the data. If the data is inaccurate, incomplete, or biased, the results of the analysis will also be inaccurate, incomplete, or biased.

- Sampling: Statistics is often based on samples of data, rather than the entire population. This means that the results of the analysis may not be generalizable to the entire population.

- Assumptions: Statistical methods often rely on assumptions about the data, such as the assumption that the data is normally distributed. If these assumptions are not met, the results of the analysis may be misleading.

- Causation vs. correlation: Statistics can show that two variables are correlated, but it cannot prove that one variable causes the other.

- Misinterpretation: Statistics can be misused or misinterpreted, leading to false conclusions.

Solved Problems – Statistics

Example 1: Find the mean of the data set.

Solution:

|

xi

|

fi

|

fixi

|

|

2

|

3

|

6

|

|

3

|

4

|

12

|

|

5

|

4

|

20

|

|

8

|

5

|

40

|

Mean = (Σf ixi)/Σfi

Σfixi = (6 + 12 + 20 + 40) = 78, and

Σfi = 16

⇒ Mean = 78/16 = 4.875

Example 2: Find Standard Deviation of 4, 7, 10, 13, and 16.

Solution:

Given,

- xi = 4, 7, 10, 13, 16

- N = 5

Σxi = (4 + 7 + 10 + 13 + 16) = 50

⇒ Mean(μ) = Σxi/N = 50/5 = 10

Standard Deviation = √(σ) = √{∑i = 1n (xi – μ)}/N

⇒ SD = √{1/5[(4 – 10)2 + (7 – 10)2 + (10 – 10)2 + (13 – 10)2 + (16 – 10)2]}

⇒ SD = √{1/5[36 + 9 + 0 + 9 + 36] = √{1/5[90]} = 18

Conclusion of Statistics

Statistics is not merely a collection of formulas; it’s a language that unlocks understanding across every discipline imaginable. From decoding the secrets of the universe to optimizing marketing campaigns, statistics plays a vital role. It empowers researchers in medicine, finance, engineering, and countless other fields to analyze patterns, measure risk, and predict future outcomes.

Statistics – FAQs

How to calculate mean?

The mean is the most common way to calculate the “average” of a data set.

- Formula: Mean = Σ(x_i) / n

- Σ (sigma) represents the sum of all the values (x_i) in the data set.

- n represents the total number of values in the data set.

How to calculate median?

The median is the ‘middle’ value when the data is ordered from least to greatest.

- If you have an odd number of data points, the median is the exact middle value.

- If you have an even number of data points, the median is the mean of the two middle values.

How to calculate mode?

The mode is the most frequent value in the data set. A data set can have multiple modes, or even no mode at all if all the values appear an equal number of times.

What do you mean by Statistics?

Statistics in mathematics is defined as the branch of science that deals with the number and is used to take find meaningful information of the data.

What are the types of statistics?

There are two basic types of statics that are,

- Descriptive statistics

- Inferential statistics

What is statistics and its application?

Statistics is a branch of mathematics that deals with the numbers and has various applications. Various applications of statistics are,

- It is used for mathematical computing.

- It is used for finding probability and chances.

- It is used for weather forecasting, etc.

What are the 3 main formulas in statistics?

The three common formulas of statistics are:

Who is father of statistics?

A British mathematician Sir Ronald Aylmer Fisher is regarded as the father of Statistics.

What are stages of statistics?

There are five stages of statistics that are,

- Problem

- Plan

- Data

- Analysis

- Conclusion

Who is known as father of Indian statistics?

Indian mathematician Prasanta Chandra Mahalanob is known as father of Indian Statistics.

Please Login to comment...